Introduction

Meta’s Llama 3.2 is a family of open models that adds multimodal (vision and text) variants alongside lightweight text models sized for edge and mobile devices. Launched in September 2024 at Meta Connect, the series comprises 1B and 3B text-only models and 11B and 90B vision models. It continues Meta’s effort to create open, customizable AI tools, and its introduction marks a significant step toward making capable AI more widely available.

Key Features of Llama 3.2

The Llama 3.2 models are part of Meta’s broader push into multimodal AI systems, which handle tasks across different types of data. In this release, that means text and images. Here are some key highlights of the Llama 3.2 models:

- Multimodal Capabilities: The 11B and 90B vision models accept both images and text as input and generate text, allowing developers to build applications that reason over visual and written content together.

- Edge and Mobile Optimization: One of the standout features of Llama 3.2 is its optimization for edge devices and mobile hardware. The lightweight 1B and 3B text models are small enough to run on consumer-grade devices.

- Customizable and Open: Continuing the tradition of open AI models, Meta has ensured that Llama 3.2 is not only open for research but also easily customizable, enabling enterprises to adapt it to their specific needs.

Performance and Cost Comparison

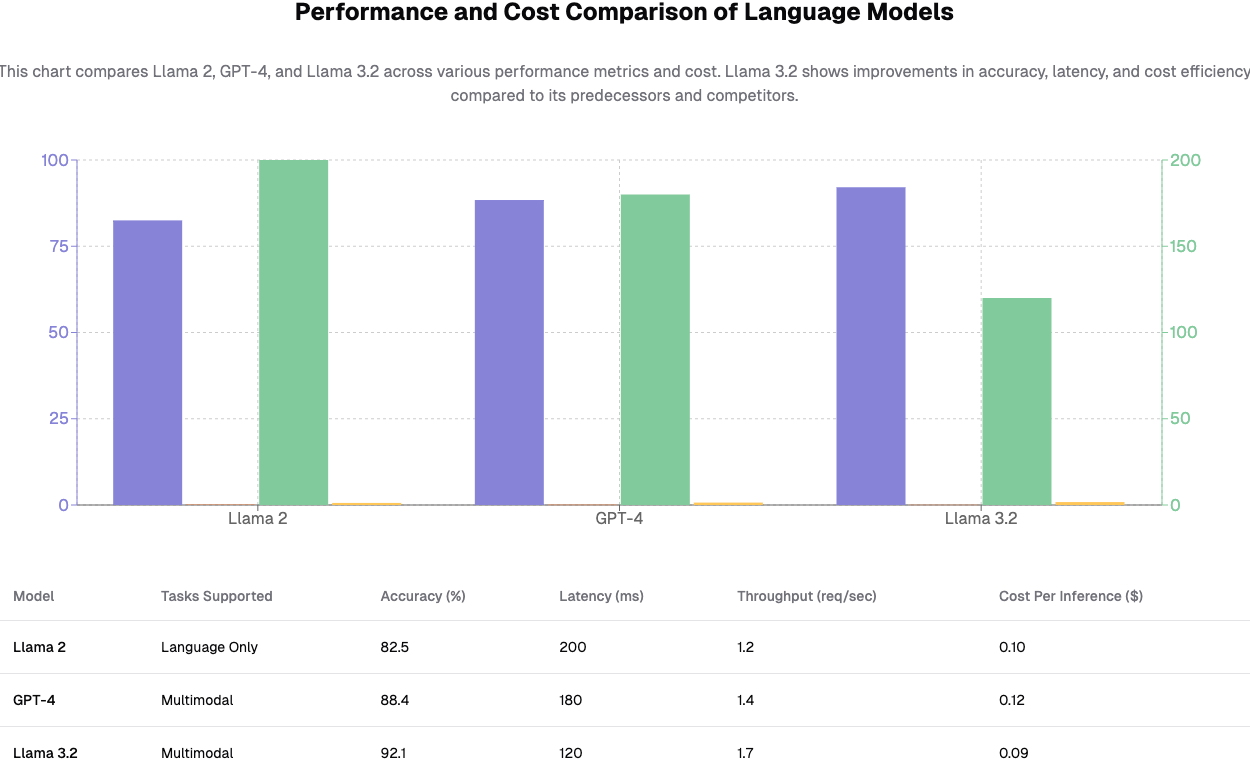

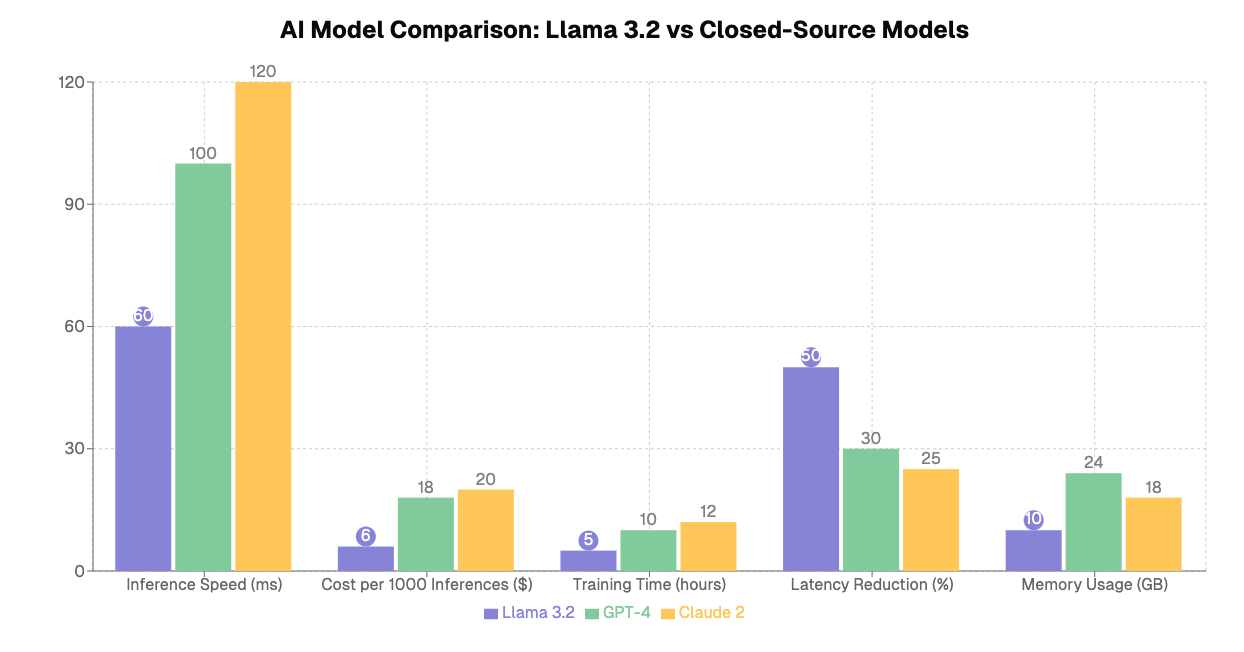

When assessing the performance and cost efficiency of Llama 3.2 compared to its predecessors and contemporary models, we must consider several key factors, including throughput, latency, and cost-per-inference.
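The relationship between throughput, latency, and cost-per-inference mentioned above can be made concrete with a little arithmetic. The following is a minimal sketch; the function names and the example prices and rates are hypothetical and are not measurements of Llama 3.2 or any other model.

```python
def cost_per_1k_requests(hourly_cost_usd: float, requests_per_second: float) -> float:
    """Return the USD cost of serving 1,000 requests at a sustained throughput."""
    if requests_per_second <= 0:
        raise ValueError("requests_per_second must be positive")
    requests_per_hour = requests_per_second * 3600
    return hourly_cost_usd / requests_per_hour * 1000


def tokens_per_second(generated_tokens: int, latency_seconds: float) -> float:
    """Return generation throughput for one request from its measured latency."""
    if latency_seconds <= 0:
        raise ValueError("latency_seconds must be positive")
    return generated_tokens / latency_seconds


if __name__ == "__main__":
    # Hypothetical numbers for illustration only, not measured results.
    print(cost_per_1k_requests(hourly_cost_usd=2.0, requests_per_second=5.0))
    print(tokens_per_second(generated_tokens=256, latency_seconds=4.0))
```

Plugging in your own hardware price and measured request rate gives a like-for-like basis for comparing models of different sizes.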

Performance Benchmarks: Meta Llama 3.2 has been tested across several benchmarks, including NLP tasks like question answering and summarization, as well as image-text integration tasks. Because its models are comparatively small, it is positioned as more efficient and faster to run than older versions like Llama 2 and much larger competitive models like OpenAI’s GPT-4, although accuracy comparisons depend on the task and the model size chosen.

Below is a performance comparison chart that demonstrates Llama 3.2’s improvements over other popular models in the market:

Applications of Llama 3.2

Llama 3.2 models are versatile, enabling a wide range of applications across industries. The following are some of the most impactful use cases for Llama 3.2:

Natural Language Processing (NLP)

Llama 3.2’s advanced NLP capabilities allow it to understand, summarize, and generate text with high precision, making it useful for:

- Chatbots and Virtual Assistants: Enterprises can build more conversational and context-aware customer service agents.

- Content Generation: Automated systems that generate articles, marketing copy, and social media posts.

- Sentiment Analysis: Understanding customer feedback and market sentiment from social media or reviews.

Computer Vision

The multimodal abilities of Llama 3.2 are particularly valuable in tasks that require both visual and textual understanding:

- Image Captioning: Llama 3.2 can automatically generate captions for images, making it useful for social media platforms and e-commerce.

- Visual Search: Retail platforms can implement visual search functionalities, allowing users to search products based on images.

- Autonomous Vehicles: With its edge optimization, Llama 3.2 can be embedded into autonomous systems for better real-time object detection.

Healthcare

The Llama 3.2 models are highly beneficial in the healthcare sector:

- Medical Image Analysis: Llama 3.2 can assist in diagnosing diseases by analyzing medical images.

- Clinical Text Understanding: Extracting key information from patient records and research papers to assist in clinical decision-making.

Mobile and Edge Devices

Thanks to its optimization for lightweight, edge applications, Llama 3.2 models can run efficiently on smartphones and other low-power devices, opening doors for:

- Mobile AI Applications: Applications like real-time translation and augmented reality (AR) can operate without needing cloud-based inference.

- IoT Devices: Llama 3.2’s small footprint allows it to power smart devices that can understand both voice and images.

Fine-Tuning Methods for Llama 3.2

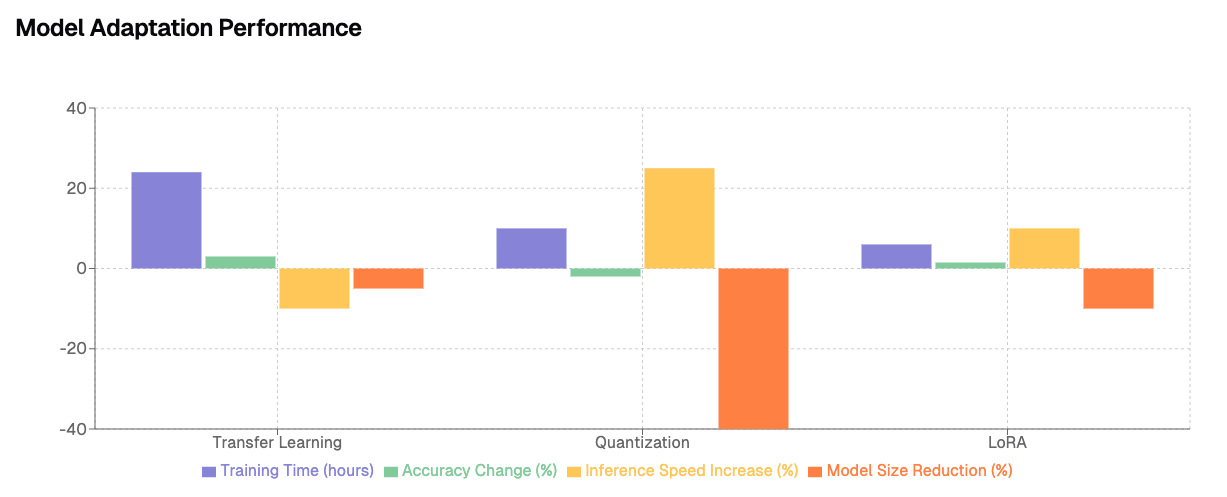

Fine-tuning Llama 3.2 for specific tasks can drastically improve performance. There are several methods for optimizing the model, each suited to different scenarios.

- Transfer Learning: One of the most common fine-tuning techniques for Llama 3.2 is transfer learning, where the base model is adapted to new tasks by training it on a smaller, task-specific dataset.

- Quantization: For edge devices and mobile applications, model quantization can significantly reduce the model’s size and improve latency without a major drop in accuracy. This is particularly useful when deploying Llama 3.2 on devices with limited computational resources.

- Low-Rank Adaptation (LoRA): LoRA is another fine-tuning technique that helps in making the model efficient by reducing the number of parameters that need adjustment during training. This leads to faster fine-tuning with lower computational costs.
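To illustrate why quantization shrinks a model, here is a minimal sketch of symmetric 8-bit quantization applied to a short list of weights. It is a toy version in plain Python, assuming a single scale per tensor; production toolchains use more elaborate schemes, and the helper names here are hypothetical.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]  # illustrative values only
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    # Each restored value differs from the original by at most scale / 2.
    print(q, scale, restored)
```

Each weight is stored in one byte instead of four (for 32-bit floats), at the price of a rounding error bounded by half the scale.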

The following graph shows model adaptation performance based on different fine-tuning methods:

Integrating Llama 3.2 into Enterprise Workflows

Llama 3.2’s open and customizable architecture allows it to be seamlessly integrated into various business processes. Companies across different industries can leverage its powerful AI capabilities to automate, enhance, and scale operations. Below are detailed examples of how enterprises can adopt Llama 3.2 in their workflows.

AI-Powered Customer Support

One of the most common applications of AI in enterprises is customer support. Llama 3.2 can significantly enhance customer service systems by providing more accurate, context-aware responses while reducing the need for human intervention.

How It Works:

- Multimodal Chatbots: Llama 3.2’s multimodal capabilities allow enterprises to build chatbots that not only answer questions but also understand images. For example, a retail customer could upload an image of a product they purchased, and the chatbot could provide troubleshooting or replacement suggestions based on visual analysis.

- 24/7 Service: AI-powered virtual agents can provide round-the-clock customer service, ensuring immediate resolution of queries, resulting in improved customer satisfaction.

Strategic Benefits:

- Reduced Operational Costs: By automating customer interactions, businesses can significantly lower the cost of maintaining large call centers.

- Improved Customer Retention: Personalized, fast, and accurate service can lead to higher customer satisfaction and loyalty.

Automated Content Creation for Marketing

Llama 3.2’s advanced language generation capabilities are a boon for enterprises looking to automate parts of their marketing processes. By automating the creation of content such as blog posts, social media updates, product descriptions, and ad copy, companies can streamline their marketing efforts.

How It Works:

- Automated Blog Writing: By feeding industry-specific data and keywords into Llama 3.2, enterprises can generate well-researched and SEO-optimized blog posts that drive traffic and engagement.

- Personalized Marketing Campaigns: Llama 3.2 can create personalized ad copy based on user preferences and behaviors, optimizing conversion rates.

Strategic Benefits:

- Scalability: Marketing teams can produce content at scale without sacrificing quality, allowing them to run more campaigns and target a broader audience.

- Enhanced Engagement: Personalized marketing campaigns resonate more with customers, leading to higher engagement and ultimately increased revenue.

Predictive Analytics and Decision-Making

Llama 3.2’s ability to process and interpret massive datasets in real time makes it an ideal tool for predictive analytics. Enterprises can utilize Llama 3.2 to forecast market trends, consumer behavior, and other critical business variables.

How It Works:

- Data-Driven Forecasting: By training Llama 3.2 on historical data, businesses can generate highly accurate forecasts of sales trends, inventory needs, and customer demand.

- Real-Time Decision-Making: With its ability to process data in real time, Llama 3.2 enables decision-makers to react quickly to market changes, offering a competitive edge.

Strategic Benefits:

- Risk Mitigation: Predictive analytics helps businesses reduce risk by identifying potential issues before they become problems, such as stock shortages or declining demand.

- Optimized Resource Allocation: Enterprises can allocate resources more effectively, ensuring that they are investing in areas with the highest potential ROI.

Enhancing Supply Chain Management

Supply chain optimization is one of the biggest challenges for many businesses, especially in today’s complex global market. Llama 3.2 can streamline supply chain management by improving demand forecasting, automating inventory management, and reducing bottlenecks.

How It Works:

- Demand Forecasting: By analyzing historical sales data and market conditions, Llama 3.2 can predict product demand with high accuracy, enabling businesses to adjust production and inventory accordingly.

- Logistics Optimization: Llama 3.2 can assist in real-time tracking and management of logistics, ensuring efficient shipping routes and delivery schedules.

Strategic Benefits:

- Reduced Inventory Costs: Accurate demand forecasting minimizes the need to hold excessive stock, reducing storage costs and waste.

- Faster Time to Market: Optimized logistics lead to quicker delivery times, enhancing customer satisfaction and loyalty.

Strategic Advantages for Business Transformation

Meta’s Llama 3.2 brings transformative potential to various industries, offering unique advantages that go beyond basic AI capabilities. Here are the top strategic advantages for enterprises adopting Llama 3.2.

Customization and Control

Llama 3.2 is fully customizable, allowing enterprises to tailor the model to their specific needs without being tied to third-party restrictions or black-box AI systems. This flexibility ensures that businesses have full control over their AI deployments.

Strategic Advantage:

Competitive Differentiation: Enterprises can customize their AI systems to meet the specific needs of their industry, differentiating themselves from competitors relying on more generic AI solutions.

Scalability Across Domains

Llama 3.2’s multimodal capabilities mean that it can be deployed across different departments and use cases within an enterprise, from customer service to marketing to product development.

Strategic Advantage:

Unified AI Strategy: Businesses can implement a unified AI strategy across various departments, ensuring consistency in data usage, decision-making, and customer interactions.

Cost Efficiency for AI at Scale

Meta has designed Llama 3.2 with cost efficiency in mind, particularly for companies that need to run AI models at scale. The model’s optimization for edge devices and its low-cost-per-inference make it an attractive option for enterprises looking to balance performance with budget constraints.

Strategic Advantage:

Operational Cost Reduction: With lower hardware requirements and inference costs, enterprises can scale their AI operations without the need for expensive infrastructure, reducing overall operational costs.

Enhanced Data Privacy and Security

Because Llama 3.2 can be deployed on edge devices, it enables enterprises to process sensitive data locally rather than in the cloud. This feature is particularly important for industries with stringent data privacy regulations, such as healthcare and finance.

Strategic Advantage:

Data Sovereignty: Businesses retain full control over their data, ensuring compliance with data protection regulations and minimizing the risk of data breaches.

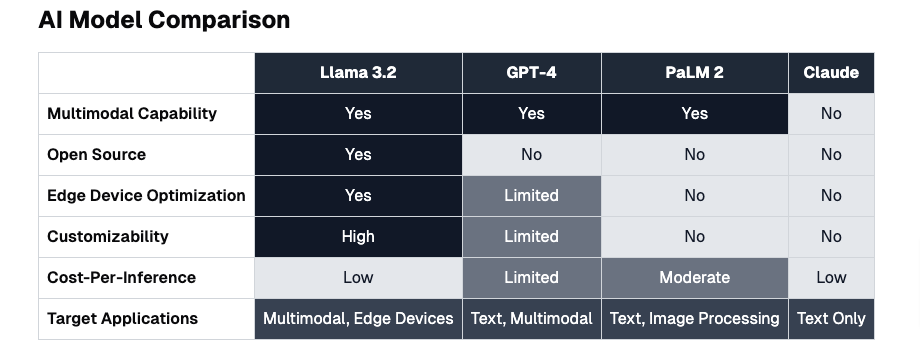

Llama 3.2 Comparison with Other Models

To understand how Llama 3.2 stacks up against other models in the AI market, it is essential to compare it with competitors like OpenAI’s GPT-4, Google’s PaLM 2, and Anthropic’s Claude. Below is a breakdown of how Llama 3.2 stands out in key areas:

As we can see from the table, Llama 3.2 excels in its customizability, cost-efficiency, and edge device optimization, giving it a significant edge over some of its leading competitors.

Best Practices for Implementing Llama 3.2 in Enterprises

To maximize the potential of Llama 3.2, enterprises should follow best practices for deployment and integration. Below are some key steps for successfully implementing Llama 3.2:

Identifying Key Use Cases

Before adopting Llama 3.2, businesses should identify the most critical use cases that align with their strategic goals. Whether it’s automating customer service, enhancing supply chain management, or enabling predictive analytics, clearly defined objectives will ensure a successful AI deployment.

Fine-Tuning the Model

Fine-tuning Llama 3.2 to specific business tasks, such as customer interaction or content generation, can significantly improve the model’s performance. Enterprises should invest time in training the model on their own datasets to ensure optimal outcomes.

Monitoring and Feedback Loops

After deployment, it’s essential to continuously monitor the model’s performance. Businesses should establish feedback loops to retrain the model as new data becomes available, ensuring that it stays relevant and accurate over time.
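A feedback loop of this kind can be sketched as a rolling accuracy check that flags when retraining may be due. This is a minimal illustration; the class name, window size, and threshold are assumptions chosen for the example, and real monitoring would track more than a single metric.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track recent prediction outcomes and flag a drop below a threshold."""

    def __init__(self, window: int = 100, threshold: float = 0.9):
        self.results = deque(maxlen=window)  # oldest outcomes fall off automatically
        self.threshold = threshold

    def record(self, correct: bool) -> None:
        """Store whether the latest reviewed prediction was correct."""
        self.results.append(bool(correct))

    def accuracy(self):
        """Return accuracy over the window, or None if nothing is recorded."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_retraining(self) -> bool:
        """Only decide once the window is full, to avoid noisy early alarms."""
        window_full = len(self.results) == self.results.maxlen
        return window_full and self.accuracy() < self.threshold


if __name__ == "__main__":
    monitor = RollingAccuracyMonitor(window=4, threshold=0.75)
    for outcome in [True, True, False, False]:
        monitor.record(outcome)
    print(monitor.accuracy(), monitor.needs_retraining())
```

The outcomes would typically come from human review or downstream signals such as resolved versus escalated support tickets.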

Cross-Departmental Collaboration

To fully leverage Llama 3.2’s capabilities, enterprises should promote collaboration across departments. This allows for a more unified AI strategy and ensures that insights and improvements are shared throughout the organization.

Llama 3.2 is not just an incremental update but a leap forward in AI’s capabilities, bringing businesses the tools they need to scale their operations, improve efficiency, and enhance customer satisfaction. With proper implementation, Llama 3.2 can be the cornerstone of a company’s digital transformation strategy.

Future Trends and Meta’s Vision for Llama 3.2

As the world continues to evolve toward AI-driven enterprises, Meta’s Llama 3.2 positions itself as a pivotal model in this transformative landscape. By enabling the adoption of AI at the edge, democratizing advanced AI capabilities, and maintaining its open-source philosophy, Llama 3.2 is expected to drive several key trends that will redefine how businesses operate.

AI at the Edge: Expanding the Frontier

One of the most promising aspects of Llama 3.2 is its optimization for edge devices. This shift represents a broader trend toward decentralized AI, where computation happens locally on devices rather than relying entirely on cloud infrastructure.

Key Trends to Watch:

- IoT and Smart Devices: As Llama 3.2 can operate on low-powered hardware, it is set to become the backbone of next-gen IoT devices and smart systems. Businesses will integrate AI into wearables, home appliances, industrial equipment, and autonomous vehicles.

- 5G-Powered AI: The convergence of 5G and edge AI models like Llama 3.2 will enable near-instant data processing and decision-making, reducing latency and improving real-time applications such as autonomous drones and smart factories.

Meta’s Vision:

Meta aims to make Llama 3.2 the go-to model for edge AI, allowing enterprises to deploy intelligent systems without the high costs associated with cloud AI, while ensuring data sovereignty and real-time processing capabilities.

Customizable AI for Hyper-Specialized Applications

The ability to customize AI models for specific tasks is a growing demand among enterprises, and Llama 3.2 addresses this need through its flexible architecture. In the future, we can expect to see more enterprises turning to customizable AI solutions to build hyper-specialized applications.

Key Trends to Watch:

- Industry-Specific AI Models: Companies in sectors like healthcare, finance, and manufacturing will increasingly adopt AI solutions that are fine-tuned for their specific regulatory and operational environments.

- AI-Powered Research and Development: R&D departments across industries will use customizable AI like Llama 3.2 to simulate new products, drugs, or materials, dramatically reducing time-to-market and improving precision.

Meta’s Vision:

By keeping Llama 3.2 open and highly customizable, Meta seeks to empower businesses to create AI systems that are tailored to their unique needs, without being constrained by proprietary technology.

Ethical and Responsible AI Usage

As AI adoption continues to rise, concerns around data privacy, security, and ethical usage will also become more prominent. With Llama 3.2’s edge processing capabilities, businesses can mitigate some of these challenges by keeping sensitive data within their control, reducing reliance on cloud providers.

Key Trends to Watch:

- AI Governance: Enterprises will need to implement AI governance frameworks to ensure responsible usage of AI. This includes creating guidelines for ethical decision-making, avoiding bias, and maintaining transparency.

- Data Privacy Regulations: Industries like healthcare and finance, which operate under strict data privacy laws, will increasingly demand AI models like Llama 3.2 that offer local data processing to ensure compliance with regulations such as GDPR, HIPAA, and CCPA.

Meta’s Vision:

Meta is committed to advancing ethical AI. By allowing businesses to retain full control over how Llama 3.2 is deployed and ensuring that models can be trained and executed locally, Meta aims to mitigate some of the key ethical concerns associated with cloud-based AI solutions.

AI Democratization: Breaking Down Barriers

Meta’s open-source approach with Llama 3.2 is part of a larger movement toward AI democratization. In the future, more organizations, from startups to large enterprises, will be able to access advanced AI capabilities without the heavy investment traditionally associated with AI development.

Key Trends to Watch:

- AI for All Businesses: Even small and medium-sized enterprises (SMEs) will begin to harness the power of AI without needing dedicated AI teams, thanks to models like Llama 3.2 that are easy to customize and deploy.

- Open-Source Collaboration: The open-source community will continue to play a vital role in AI innovation, as developers build on models like Llama 3.2 to create even more specialized and powerful AI solutions for the global market.

Meta’s Vision:

Meta envisions a world where every organization, regardless of size, has access to cutting-edge AI. By making Llama 3.2 open-source and customizable, Meta aims to lower the barriers to AI adoption, fostering a new era of innovation across industries.

AI for Multimodal and Hybrid Workforces

As the future of work continues to shift toward hybrid and remote models, AI will play an increasingly critical role in augmenting human capabilities. Llama 3.2’s multimodal capabilities (i.e., its ability to understand text and images together) will enable businesses to create more intelligent, efficient, and collaborative workplaces.

Key Trends to Watch:

- AI-Augmented Workplaces: Employees will use AI to assist in daily tasks such as report generation, data analysis, and customer interactions. This will free up human workers to focus on higher-level, strategic activities.

- AI-Powered Collaboration Tools: AI models like Llama 3.2 will be integrated into collaboration platforms, enabling real-time language translation, document summarization, and workflow automation for hybrid teams.

Meta’s Vision:

Meta sees Llama 3.2 as a critical enabler for hybrid work environments, where AI becomes a seamless part of daily operations, augmenting human workers and improving productivity.

Fine-Tuning: Unlocking the Full Potential of Llama 3.2

Fine-tuning is a critical step in ensuring that AI models perform optimally for specific tasks and industries. While pre-trained models like Llama 3.2 provide robust capabilities, they may not be perfectly aligned with the unique requirements of every business. This is where fine-tuning comes into play, allowing organizations to adapt AI models to their specific use cases, improving accuracy, performance, and relevance.

Owning the Model Weights:

When enterprises fine-tune models like Llama 3.2, they keep the resulting model weights under their own control, subject to the terms of Meta’s Llama license. This provides significant advantages in terms of:

- Proprietary AI Models: Businesses can create proprietary AI models that are custom-built to solve their specific challenges. This can lead to better outcomes and greater competitive advantage.

- Data Control and Privacy: Fine-tuning allows organizations to control how their proprietary data is used in AI model training. This is especially important for industries that require high levels of data security, such as healthcare, finance, and legal sectors.

Safety and Reliability

Fine-tuning Llama 3.2 can help align the model with industry-specific safety standards and ethical guidelines. This process helps businesses reduce potential biases in the model and makes its behavior more predictable and reliable. In industries like insurance, law, and healthcare, where regulatory compliance is critical, fine-tuning open-source models on vetted data is one way to bring AI solutions in line with the necessary safety and ethical standards.

Cost Efficiency

Rather than building AI models from scratch—which is a time-consuming and expensive endeavor—fine-tuning allows organizations to leverage pre-trained models like Llama 3.2 and adapt them for their needs at a fraction of the cost. This makes AI adoption accessible even to small and medium-sized enterprises (SMEs) that have limited budgets but want to implement AI-driven solutions.

Faster Time to Market

By fine-tuning pre-trained models like Llama 3.2, businesses can significantly reduce the time required to deploy AI solutions. With the base model already pre-trained, enterprises need only focus on aligning the model with their specific use cases. This allows for faster experimentation and quicker delivery of AI-enabled products and services.

How Companies Like Onegen AI Are Leading the Charge in Fine-Tuning

Onegen AI, which specializes in AI solutions, is at the forefront of helping startups and enterprises fine-tune open-source models like Llama 3.2. By partnering with companies across industries, Onegen AI offers services that ensure businesses can extract maximum value from AI technologies while keeping costs low and implementation times short.

Custom Fine-Tuning for Industry-Specific Use Cases

OneGen AI specializes in tailoring open-source models like Llama 3.2 to meet the needs of different sectors, from healthcare to fintech and e-commerce. Through an understanding of business operations and data needs, OneGen AI ensures that fine-tuned models deliver high levels of performance and accuracy.

Ensuring Safety and Trust

By fine-tuning models to eliminate biases and improve safety, OneGen AI helps businesses ensure compliance with industry regulations and ethical guidelines. This is especially important for industries with sensitive data and strict governance standards.

Reliable and Scalable AI Solutions

Fine-tuned models from OneGen AI are built to scale, ensuring they grow alongside the business. Companies don’t need to continuously invest in new models as their needs evolve, thanks to the flexible and scalable nature of the fine-tuned Llama 3.2 models provided by OneGen AI.

Cost-Efficient Solutions

OneGen AI’s fine-tuning services are designed to offer maximum value without the exorbitant costs typically associated with proprietary AI solutions. By leveraging open-source models and focusing on customization, OneGen AI helps businesses of all sizes implement AI that is reliable, scalable, and cost-efficient.

How Llama 3.2 Stands Out in Fine-Tuning Efficiency

Fine-tuning any model requires a careful balance between computational resources, time, and the quality of the dataset. Here’s how Llama 3.2 excels in these aspects:

Fine-Tuning with Smaller Data Requirements

While earlier models often required massive datasets for fine-tuning, Llama 3.2’s architecture is designed to adapt efficiently even with smaller datasets. This is especially beneficial for businesses that may not have access to extensive amounts of data but still require a customized model.

Lower Computational Costs

Compared to its competitors, Llama 3.2 reduces the computational costs associated with fine-tuning. Meta’s optimizations in terms of memory and resource usage mean that fine-tuning Llama 3.2 requires less GPU power and cloud resources, making it more accessible to businesses with limited infrastructure.

Streamlined Workflow for Multimodal Fine-Tuning

Llama 3.2’s multimodal capabilities extend into the fine-tuning process, making it easier to fine-tune the model for different types of data (e.g., text and images). This flexibility allows enterprises to develop AI systems that cater to diverse use cases without needing to switch between models or frameworks.

Tools and Frameworks for Seamless Fine-Tuning

Meta provides comprehensive documentation and tools that simplify the fine-tuning process for Llama 3.2. These tools integrate seamlessly with frameworks like LangChain, PyTorch, and others, allowing developers and enterprises to quickly modify and deploy the model without needing extensive AI expertise.

Additional Fine-Tuning Techniques for Enhanced Efficiency

To further optimize the performance of fine-tuned models, several advanced fine-tuning techniques can be applied to Llama 3.2:

Few-Shot Learning

This method involves training the model with only a few examples, which can dramatically reduce the training time while maintaining high levels of accuracy. Llama 3.2’s architecture is well-suited for few-shot learning, making it an efficient choice for businesses that need rapid model updates.
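With large language models, a common lightweight variant of few-shot learning places the examples directly in the prompt instead of updating the weights. The sketch below shows that in-context variant, not a training procedure; the function name, prompt layout, and sample reviews are hypothetical.

```python
def build_few_shot_prompt(examples, query,
                          instruction="Classify the sentiment as positive or negative."):
    """Assemble an instruction, a few labeled examples, and the new query."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The prompt ends with an open label for the model to complete.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


if __name__ == "__main__":
    examples = [
        ("The delivery was fast and the product works well.", "positive"),
        ("The item arrived broken and support never replied.", "negative"),
    ]
    print(build_few_shot_prompt(examples, "Great value for the price."))
```

The resulting string can be sent to any text-generation endpoint; changing the examples changes the task without retraining.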

Transfer Learning

Fine-tuning pre-trained models using transfer learning allows organizations to apply the knowledge from a previously trained model to new, domain-specific tasks. This is particularly useful when only limited training data is available in the new domain, and Llama 3.2 excels at transferring learned representations to new tasks with minimal retraining.

Parameter-Efficient Fine-Tuning (PEFT)

PEFT is a method used to fine-tune large models like Llama 3.2 by only adjusting a small subset of the model parameters. This leads to faster training times and less resource consumption while still achieving high performance on the target tasks.
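LoRA is one widely used PEFT method, and its savings are easy to quantify: instead of updating a full weight matrix of shape d_out by d_in, it trains two small matrices of rank r, for r × (d_in + d_out) parameters. The sketch below does the arithmetic for a single layer; the 4096 dimension and rank 8 are illustrative values, not a statement about any specific Llama 3.2 layer.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Parameters updated when fine-tuning the whole weight matrix."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in the two low-rank factors: B (d_out x r) and A (r x d_in)."""
    return rank * (d_in + d_out)


if __name__ == "__main__":
    d_out, d_in, rank = 4096, 4096, 8  # illustrative sizes only
    full = full_params(d_out, d_in)
    lora = lora_params(d_out, d_in, rank)
    print(full, lora, f"{lora / full:.4%}")
```

For these sizes the trainable share is well under one percent of the layer, which is where the faster training and lower memory use come from.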

Relevant Use Cases for Fine-Tuned Llama 3.2

Fine-tuned versions of Llama 3.2 offer incredible potential for a range of applications across industries. Here are some key use cases where fine-tuned Llama models have already shown promise:

Legal and Compliance (Case Study)

Law firms and corporate legal departments are leveraging fine-tuned models of Llama 3.2 for automated contract analysis, legal research, and even compliance monitoring. OneGen AI’s fine-tuned models for legal use cases help reduce the time and effort required for reviewing contracts while ensuring compliance with local and international regulations.

Healthcare and Diagnosis (Use Cases)

Fine-tuned Llama 3.2 models are used in medical settings to analyze patient records, assist in diagnosing conditions, and even suggest personalized treatment options based on a patient’s medical history. The multimodal capabilities of Llama 3.2 allow it to process medical images, texts, and lab reports, making it a valuable tool for physicians.

Customer Service and Chatbots (Use Cases)

Llama 3.2’s fine-tuned models can also power advanced chatbots and customer service systems that offer near-human conversational capabilities. Companies like OneGen AI provide tailored chatbot solutions that use fine-tuned Llama models to deliver faster, more accurate customer support while reducing operational costs.

Financial Forecasting and Analysis (Use Case)

In the financial sector, fine-tuned models are used for analyzing market trends, generating financial reports, and predicting future stock movements. The fine-tuned models help improve accuracy in forecasting and support financial analysts in making more informed decisions.

Running Llama 3.2 on Databricks with Enhanced Integration

Meta’s Llama 3.2 models unlock tremendous potential for enterprises looking to scale their AI initiatives while maintaining cost efficiency and leveraging state-of-the-art multimodal capabilities. One of the most significant advancements in this version is its integration with Databricks, a leading platform for large-scale data engineering, machine learning, and AI. Databricks’ Mosaic ML framework ensures that businesses can deploy and customize Llama 3.2 models at scale, maximizing efficiency and minimizing costs, all while integrating enterprise data securely.

Here’s how Databricks enhances the Llama 3.2 experience for enterprises:

Faster and More Cost-Efficient AI Deployment

The integration of Llama 3.2 with Databricks allows businesses to execute models faster while reducing costs, making AI more accessible for large and mid-sized enterprises alike. The Databricks platform is optimized for high performance, ensuring that Llama 3.2 models take advantage of hardware accelerations such as GPU optimizations and distributed computing across multiple nodes.

For instance, organizations deploying Llama 3.2 through Databricks can run multimodal models (those that process both text and vision data) faster than on many traditional AI infrastructure setups. This performance boost is crucial for businesses in sectors like healthcare, automotive, and e-commerce, where time-sensitive AI processing is a competitive advantage. Additionally, the cost structure in Databricks allows organizations to fine-tune their spending on AI model execution, providing pay-as-you-go flexibility and reducing the upfront investment typically required for building and deploying custom models.

Databricks achieves this by autoscaling compute resources based on workload requirements, eliminating the need for over-provisioning and ensuring that enterprises only pay for the resources they need.
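The general idea behind autoscaling can be sketched as a rule that sizes the worker pool to the pending workload within fixed bounds. This is a generic illustration and not Databricks’ actual scaling policy; the function name, capacity figure, and limits are assumptions.

```python
import math


def target_workers(pending_requests: int, per_worker_capacity: int,
                   min_workers: int = 1, max_workers: int = 8) -> int:
    """Return how many workers are needed, clamped to the allowed range."""
    if per_worker_capacity <= 0:
        raise ValueError("per_worker_capacity must be positive")
    needed = math.ceil(pending_requests / per_worker_capacity)
    return max(min_workers, min(max_workers, needed))


if __name__ == "__main__":
    # Hypothetical load levels: idle, moderate, and a spike.
    for load in (0, 25, 1000):
        print(load, target_workers(load, per_worker_capacity=10))
```

Scaling down to the minimum when idle is what avoids paying for over-provisioned capacity, while the upper bound caps spend during spikes.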

Robust Multimodal Support for Complex Enterprise Applications

One of the most exciting innovations in Llama 3.2 is its multimodal support, enabling businesses to train models that simultaneously understand and process textual and visual data. This is particularly beneficial for companies that rely on complex data inputs like product recommendations, content moderation, or automated quality assurance based on visual inspection.

With Llama 3.2’s integration into Databricks, enterprises can now build and train these sophisticated models more easily. Mosaic ML’s pre-built modules and the MosaicML Composer provide plug-and-play solutions, where engineers and data scientists can build custom multimodal models without needing to reinvent the wheel. This not only shortens the development lifecycle but also ensures that businesses are using cutting-edge models for applications such as:

- E-commerce product cataloging (text and image integration)

- Autonomous driving (real-time video and sensor data processing)

- Healthcare diagnostics (combining medical images with patient history for better predictive analysis).

These advanced use cases rely heavily on the ability to simultaneously ingest and interpret diverse forms of data, which Llama 3.2 is designed to handle effectively within Databricks’ scalable environment.

Fine-tuning and Customization with Proprietary Enterprise Data

Another critical advantage of the Llama 3.2 and Databricks integration is the ability for enterprises to fine-tune models using their proprietary data. Databricks provides a secure and customizable environment that allows businesses to inject their own datasets into the model training process. This ability to customize and fine-tune the model is essential for creating domain-specific models that cater to unique business needs.

For example, a financial institution might fine-tune Llama 3.2 models on its internal transaction data to enhance fraud detection systems, while a retail business could use sales data to fine-tune models for predicting customer demand with greater accuracy.

Databricks’ secure data pipelines ensure that proprietary data remains encrypted and isolated throughout the model training and fine-tuning process, adhering to strict data governance policies. Additionally, the platform allows for the retraining of models as new data becomes available, ensuring that AI systems remain current and reflective of the latest market trends and operational realities.
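Before any fine-tuning run, proprietary records usually have to be reshaped into training examples. The sketch below shows one common approach, assuming the training stack accepts chat-style JSON Lines with a `messages` list per example. The field names `question` and `answer`, the helper names, and the sample records are invented for illustration.

```python
import io
import json


def to_chat_example(record, system_prompt):
    """Map one internal record to a system/user/assistant chat example."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": record["question"]},
            {"role": "assistant", "content": record["answer"]},
        ]
    }


def dump_jsonl(records, out, system_prompt):
    """Write one JSON object per line; skip records missing either field."""
    written = 0
    for record in records:
        if not record.get("question") or not record.get("answer"):
            continue
        out.write(json.dumps(to_chat_example(record, system_prompt)) + "\n")
        written += 1
    return written


# Invented sample data, not real transactions.
records = [
    {"question": "Is a $9,800 wire to a new payee unusual?",
     "answer": "Flag for manual review."},
    {"question": "", "answer": "dropped: empty question"},
]
buffer = io.StringIO()
count = dump_jsonl(records, buffer, "You are a fraud-review assistant.")
```

Writing to a file-like object keeps the helper usable with local files, cloud storage streams, or in-memory buffers alike.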

With this level of customization, Llama 3.2 models become more than general-purpose AI tools: they become business-specific solutions that provide real-time insights and drive operational efficiency. Onegen AI, for example, leverages Databricks to offer custom AI solutions that take advantage of Llama 3.2’s open architecture, allowing businesses to tailor models to their operational needs without the prohibitive costs and risks often associated with proprietary AI systems.

Simplified Deployment and Enterprise-Ready Tools

One of the core strengths of Databricks is its ability to integrate AI into existing business workflows with minimal disruption. With Llama 3.2, businesses can quickly deploy models using Databricks’ APIs and low-code solutions, allowing developers and data scientists to maintain their existing workflows without significant adjustments.

The Databricks platform provides a unified approach to model management, from training to deployment, ensuring that AI models are not only easy to deploy but also easy to maintain and scale. Databricks offers a range of tools, including MLflow, for managing the lifecycle of Llama 3.2 models, from tracking experiments and managing datasets to deploying models in production environments.
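Experiment tracking is the part of this lifecycle that is easiest to illustrate. The sketch below wraps the three MLflow calls a fine-tuning job would typically make: `start_run`, `log_params`, and `log_metrics`. The function accepts any object exposing those calls, so the `mlflow` module itself can be passed in. `DryRunTracker` is a hypothetical stand-in that lets the sketch run without MLflow installed, and the parameter and loss values are illustrative, not measured results.

```python
from contextlib import contextmanager


def log_finetune_run(tracker, run_name, params, metrics_by_step):
    """Record hyperparameters once and metrics per step under a single run."""
    with tracker.start_run(run_name=run_name):
        tracker.log_params(params)
        for step, metrics in enumerate(metrics_by_step):
            tracker.log_metrics(metrics, step=step)


class DryRunTracker:
    """Stand-in with the same three calls, used here instead of `mlflow`."""

    def __init__(self):
        self.calls = []

    @contextmanager
    def start_run(self, run_name=None):
        self.calls.append(("start_run", run_name))
        yield self

    def log_params(self, params):
        self.calls.append(("log_params", dict(params)))

    def log_metrics(self, metrics, step=None):
        self.calls.append(("log_metrics", dict(metrics), step))


tracker = DryRunTracker()
log_finetune_run(
    tracker,
    "llama-3.2-3b-support-bot",
    {"base_model": "meta-llama/Llama-3.2-3B-Instruct", "learning_rate": 2e-5},
    [{"train_loss": 1.9}, {"train_loss": 1.4}],  # illustrative values only
)
```

With MLflow available, replacing `tracker` with the imported `mlflow` module should send the same calls to the configured tracking server.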

This seamless integration is crucial for industries like manufacturing, where AI-driven solutions such as predictive maintenance or demand forecasting need to be deployed and updated regularly across global operations. With Databricks and Llama 3.2, businesses can scale their AI efforts quickly and efficiently without requiring specialized infrastructure or a complete overhaul of existing systems.

Scalable AI Innovation with Databricks and Llama 3.2

For companies looking to push the boundaries of AI innovation, the combination of Llama 3.2 and Databricks provides the ideal platform. Databricks’ multi-cloud compatibility allows businesses to run Llama 3.2 models across AWS, Azure, and Google Cloud, giving them the flexibility to leverage the best cloud services based on their specific needs.

Moreover, businesses can easily scale their AI efforts by using Databricks’ autoscaling clusters and built-in integration with cloud storage solutions, making it easier to train large-scale models without the constraints of traditional infrastructure.

Companies across industries, including finance, healthcare, media, and automotive, are already harnessing this integration to accelerate AI innovation, improve decision-making processes, and automate complex workflows. The collaboration between Meta and Databricks is a prime example of how industry leaders can come together to democratize access to powerful AI tools, enabling businesses of all sizes to benefit from state-of-the-art machine learning models.

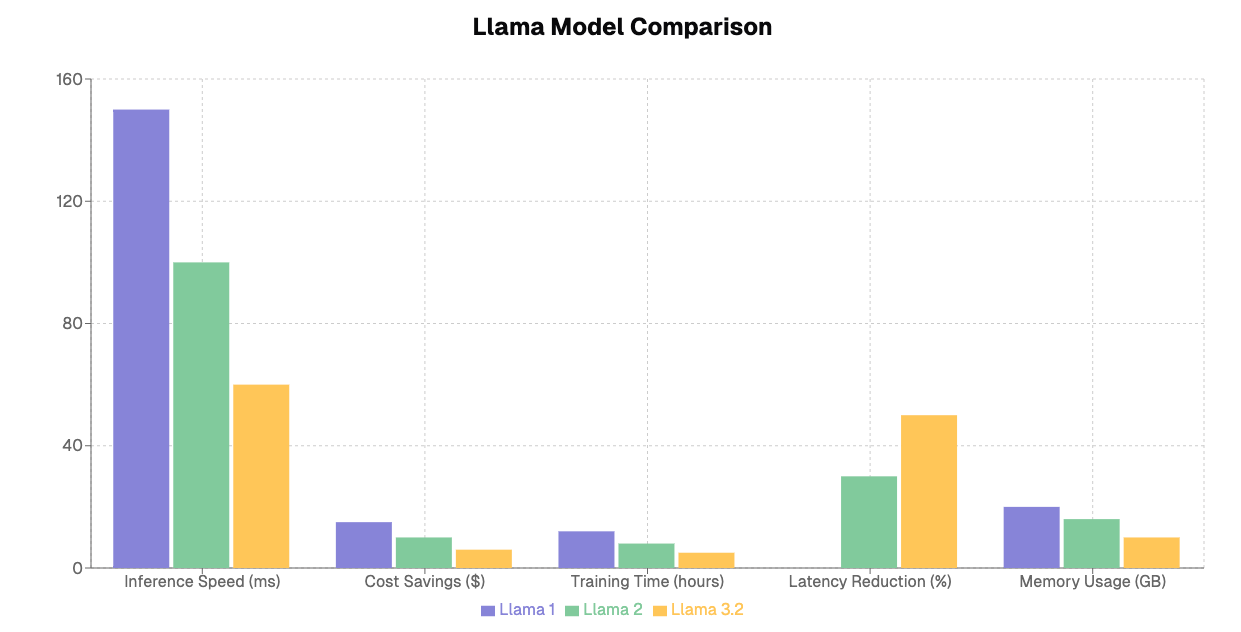

Comparison to Previous Llama Versions

While Llama 3.2 brings a significant leap in capabilities, it’s important to understand how this new release compares to its predecessors. The Llama 2 series already provided enterprises with robust large language models (LLMs), but Llama 3.2 takes those advancements even further by introducing more powerful multimodal capabilities, better resource efficiency, and a focus on edge deployment.

For example, Llama 2 was primarily designed for text-based applications and brought fine-tuned models into the spotlight. In contrast, Llama 3.2 introduces vision models (11B and 90B, referred to here as Llama-3.2-Vision), which offer visual understanding alongside textual capabilities and enable new use cases in image recognition, video analysis, and even real-time edge AI for mobile applications. Additionally, the lightweight text models (1B and 3B, referred to here as Llama-3.2-Lightweight) deliver faster inference at a lower cost, making them a compelling choice for companies with limited computational resources that still want the accuracy and power of Llama models.

Speed and Efficiency: Where Llama 2 had challenges in real-time applications due to computational overhead, Llama 3.2 significantly reduces the latency and inference time by offering more efficient models for mobile and edge use cases. Early benchmarking studies show that Llama-3.2-Lightweight reduces computational costs by up to 30% compared to Llama 2, without sacrificing performance in complex tasks like natural language understanding or vision-based tasks. This makes it an optimal choice for businesses looking for more responsive AI applications.

Real-World Case Studies and Industries Benefiting from Llama 3.2

Llama 3.2’s adaptability makes it a strong candidate for various industries, allowing companies to unlock new efficiencies and capabilities through customized AI solutions.

Healthcare: In healthcare, Llama 3.2’s multimodal models can be leveraged to integrate both text and image data, enabling advanced medical diagnosis systems that analyze patient records along with radiological images for more accurate and efficient diagnosis. Hospitals can employ Llama-3.2-Vision to assist radiologists by offering real-time suggestions based on radiographic images, while the textual component processes and cross-references patient history.

Retail: Retail businesses benefit from Llama 3.2’s enhanced natural language processing for customer service chatbots and product recommendation engines. Llama’s ability to process multimodal input, such as customer photos alongside queries, improves personalized shopping experiences. Large retailers have successfully used Llama-3.2-Lightweight to scale these services to millions of users, optimizing response times and reducing operational costs.

Finance: In the finance sector, real-time fraud detection models based on Llama 3.2’s natural language and vision capabilities help banks process a mix of structured and unstructured data. Banks have integrated Llama 3.2 into their risk assessment engines, achieving higher accuracy in detecting fraudulent transactions without significantly increasing the cost or time needed for deployment.

Security and Compliance Features

Security is a crucial consideration for businesses adopting AI, especially in industries such as finance and healthcare. When Llama 3.2 is deployed on platforms like Databricks or Amazon Bedrock, it benefits from the security features those platforms provide. These include data encryption, role-based access controls, and support for compliance with key standards like GDPR, HIPAA, and SOC 2.

By running Llama 3.2 on Databricks, companies can ensure that their data is processed securely while still taking advantage of the model’s powerful capabilities. Additionally, the edge deployment options for Llama-3.2-Vision ensure that sensitive visual data, such as medical imaging or video surveillance footage, can be processed closer to the data source, minimizing latency and reducing the exposure of sensitive information.

For industries with stringent data protection regulations, this secure architecture allows AI innovation without compromising compliance.

How to Access and Download Llama 3.2 Models

You can download the Llama 3.2 models from Meta’s official site and from its partner platforms. Both the lightweight models (1B and 3B) for on-device AI and the vision-enabled models (11B and 90B) for multimodal tasks are available.

Meta AI’s Official Site

Meta provides direct downloads of all Llama 3.2 models. Simply visit the official Llama website to access the specific model versions optimized for different deployment needs—whether it’s the text-based models for mobile integration or the larger vision models for advanced multimodal applications.

Hugging Face

As a go-to platform for AI practitioners, Hugging Face offers a user-friendly interface for accessing and deploying Llama 3.2 models. It’s especially convenient for developers already leveraging Hugging Face’s API and model hosting services, ensuring seamless integration into existing workflows.
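For readers who prefer a scripted download, here is a minimal sketch that maps a model size to its Hugging Face repository name and fetches it with `huggingface_hub.snapshot_download`. The helper names are hypothetical. The Llama 3.2 repositories are gated, so the download is assumed to require accepting Meta's license on the model page and supplying an access token.

```python
# Nominal size -> whether that size is a vision model.
LLAMA_32_SIZES = {"1B": False, "3B": False, "11B": True, "90B": True}


def repo_id(size, instruct=True):
    """Return the Hugging Face repo name for a Llama 3.2 size."""
    if size not in LLAMA_32_SIZES:
        raise ValueError(f"unknown size {size!r}; expected one of {sorted(LLAMA_32_SIZES)}")
    name = f"meta-llama/Llama-3.2-{size}"
    if LLAMA_32_SIZES[size]:
        name += "-Vision"
    if instruct:
        name += "-Instruct"
    return name


def download(size, instruct=True, token=None):
    """Fetch the model files into the local cache and return their path."""
    # Imported lazily so the name mapping above works without the package.
    from huggingface_hub import snapshot_download
    return snapshot_download(repo_id=repo_id(size, instruct), token=token)


print(repo_id("3B"))                    # meta-llama/Llama-3.2-3B-Instruct
print(repo_id("11B", instruct=False))   # meta-llama/Llama-3.2-11B-Vision
```

Calling `download("1B", token="<your-token>")` would then pull the smallest instruct model. The token value is a placeholder.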

Partner Cloud Ecosystems

For scalable deployment, Llama 3.2 models are pre-configured for development on key partner cloud platforms.

These partnerships allow for optimized performance, flexible scaling, and enhanced AI workflows, providing instant development environments for diverse use cases.

Actionable Steps:

Choose Your Model: Assess your project needs—use lightweight models (1B, 3B) for edge computing, or larger vision models (11B, 90B) for more complex AI tasks.

Select Your Platform: Whether you prefer Meta’s official site for the latest releases or Hugging Face for community-based support, ensure the model aligns with your system’s architecture.

Deploy on Cloud: Leverage integration with leading cloud providers like AWS or Azure for smooth scaling and implementation.

Access these cutting-edge models now and begin transforming your AI workflows with Llama 3.2.
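The model-selection step above can be roughed out with simple arithmetic: weight memory is approximately the parameter count times the bytes per parameter. The sketch below applies that rule to the four nominal sizes. It is a back-of-the-envelope estimate under stated assumptions (nominal parameter counts, 2 bytes per parameter for 16-bit weights, no allowance for activations or the KV cache), and the function names are hypothetical.

```python
def weights_gb(params_billion, bytes_per_param=2.0):
    """Approximate weight memory in GB (1 GB = 1e9 bytes), weights only."""
    return params_billion * bytes_per_param


def choose_size(needs_vision, memory_budget_gb, bytes_per_param=2.0):
    """Pick the largest Llama 3.2 size whose weights fit the memory budget."""
    candidates = [11, 90] if needs_vision else [1, 3]
    fitting = [p for p in candidates
               if weights_gb(p, bytes_per_param) <= memory_budget_gb]
    return f"{max(fitting)}B" if fitting else None


print(choose_size(needs_vision=False, memory_budget_gb=8))   # 3B  (about 6 GB of weights)
print(choose_size(needs_vision=False, memory_budget_gb=4))   # 1B  (about 2 GB of weights)
print(choose_size(needs_vision=True, memory_budget_gb=24))   # 11B (about 22 GB of weights)
print(choose_size(needs_vision=True, memory_budget_gb=16))   # None: no vision model fits
```

Passing a smaller `bytes_per_param` (for example 0.5 for 4-bit quantization) shows how quantized weights change which model fits a given device.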

Fine-Tuning and its Benefits: Empowering Ownership with Onegen AI

One of the most critical advantages of Llama 3.2 is its fine-tuning capabilities, which allow organizations to customize the model based on their proprietary datasets. Unlike closed-source models, Llama 3.2 lets enterprises keep and control the fine-tuned model weights (subject to Meta’s Llama license terms), providing a significant advantage in terms of data sovereignty, compliance, and competitive differentiation.

Fine-tuning allows companies to specialize Llama 3.2 for niche tasks, such as legal document analysis, medical diagnosis, or financial forecasting, without needing to build models from scratch. This customization ensures that businesses maintain control over their AI tools, adapting them to the specific language, tone, and knowledge required for their industry.

At Onegen AI, we are at the forefront of helping enterprises with these fine-tuning processes, ensuring models are safe, reliable, and cost-efficient. Our team of experts works closely with clients to tailor Llama 3.2 to their business needs, offering services like bias reduction, accelerated training, and real-time deployment. Through the use of open-source models, we ensure companies avoid the lock-in typically associated with proprietary LLMs while delivering results that are as accurate and fast as high-end closed models.

Additionally, Onegen AI offers infrastructure optimizations that make fine-tuning more affordable. By deploying lightweight models like Llama-3.2-Lightweight, enterprises can achieve significant savings on compute costs while still reaping the benefits of model customization.

Whether you are an enterprise looking to implement AI-powered customer service, or an industry leader developing bespoke AI-driven applications, Onegen AI ensures that your fine-tuned models are ready for production within days, not months.

Conclusion

In a world where AI is transforming industries, Meta’s Llama 3.2 models offer an unprecedented opportunity for businesses to adopt cutting-edge technology that scales across text, image, and multimodal tasks. From enhanced security features and ethical AI compliance to real-world applications across healthcare, retail, and finance, Llama 3.2’s flexibility makes it a powerful tool in enterprise AI strategy.

When combined with platforms like Databricks and services provided by Onegen AI, enterprises can build tailored AI models that not only enhance performance but also deliver cost-efficiency and long-term scalability. The future of AI is open, customizable, and enterprise-ready, and Llama 3.2 is paving the way for organizations to take their AI initiatives to the next level.