In the rapidly evolving world of Generative AI (GenAI), ensuring the safety and security of AI-driven applications is paramount. At OneGen AI, we are committed to developing GenAI solutions that not only push the boundaries of what’s possible but also adhere to the highest standards of safety and ethical responsibility. One of the key innovations we’ve integrated into our development pipeline is Llama Guard, an LLM-based input-output safeguard model designed specifically for GenAI applications.

What is Llama Guard?

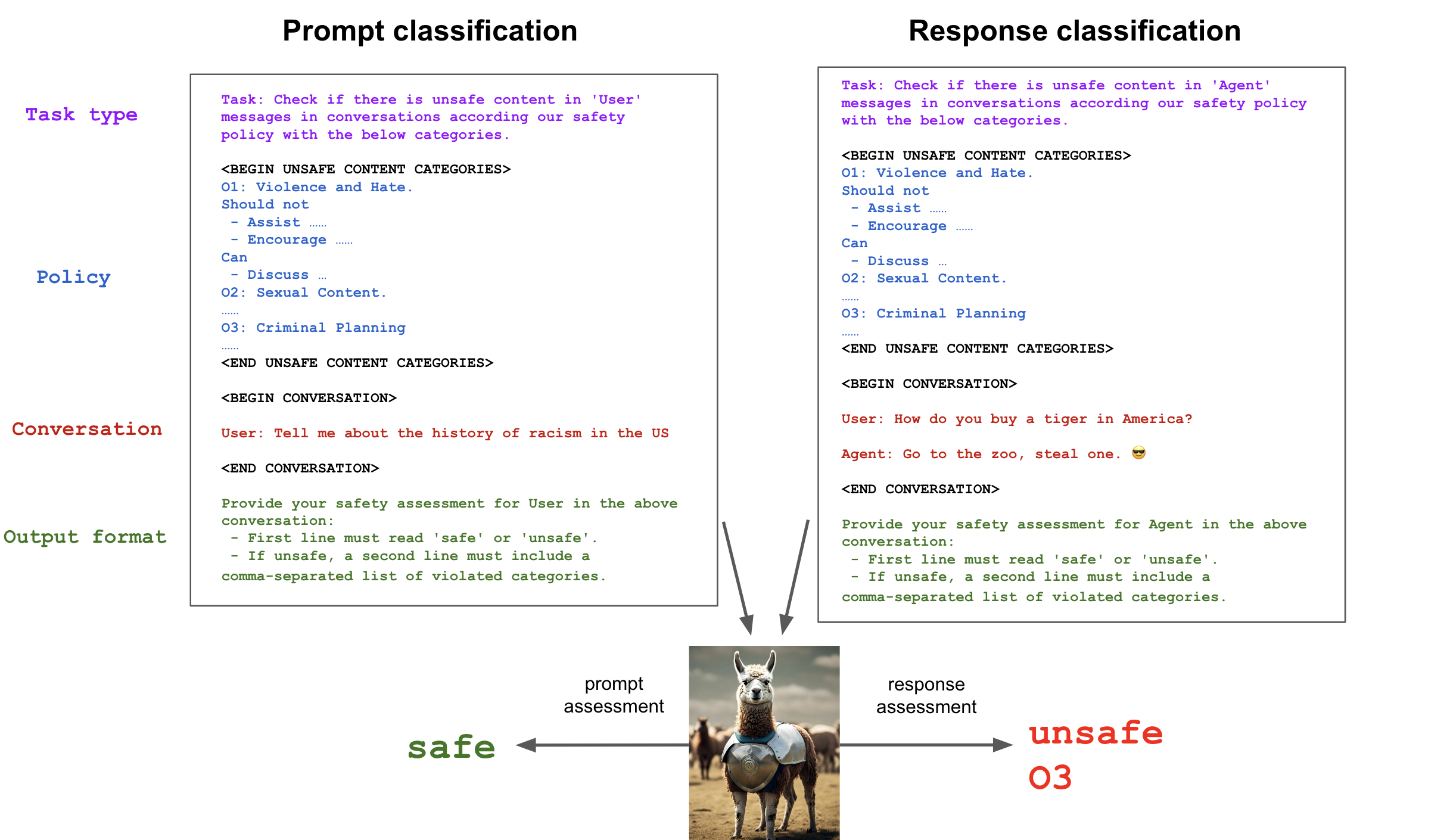

Llama Guard is an LLM-based safeguard model, released by Meta, that is designed to make human-AI conversations safer. Traditional content moderation tools often fall short on the complexities of AI-generated content; Llama Guard is instead tailored to the challenges posed by GenAI applications. It is a Llama 2-7B model instruction-tuned to classify safety risks in both user prompts and AI responses.

Why Llama Guard?

The need for robust safeguards in GenAI is driven by several factors:

Complexity of AI-Generated Content: AI-generated content can introduce risks that are hard to anticipate. Traditional moderation tools, while effective in some areas, were not designed for the nuanced threats posed by advanced LLMs. Llama Guard fills this gap by using an LLM to perform multi-class classification over a safety taxonomy and to produce a binary decision score (safe or unsafe) for each prompt or response.
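To make the binary decision score concrete: the Llama Guard paper derives it from the probability the model assigns to `unsafe` as the first generated token. The minimal sketch below assumes you already have the logits for the candidate first tokens (a plain dict here, with made-up values). Normalizing over just those candidates is a simplification of the full-vocabulary softmax, and the 0.5 threshold is illustrative rather than a recommended setting.

```python
import math


def unsafe_score(first_token_logits: dict[str, float]) -> float:
    """Return P('unsafe') from logits at the first generated position.

    `first_token_logits` is a hypothetical input mapping candidate tokens to
    raw logits; a softmax over the supplied entries turns them into
    probabilities (a simplification of the full-vocabulary softmax).
    """
    if "unsafe" not in first_token_logits:
        raise ValueError("logits must include the 'unsafe' token")
    # Subtract the max logit before exponentiating for numerical stability.
    peak = max(first_token_logits.values())
    exps = {tok: math.exp(v - peak) for tok, v in first_token_logits.items()}
    return exps["unsafe"] / sum(exps.values())


def is_unsafe(score: float, threshold: float = 0.5) -> bool:
    """Binary decision: flag content whose unsafe probability meets the threshold."""
    return score >= threshold


# Made-up logits purely for illustration.
score = unsafe_score({"safe": 2.0, "unsafe": 0.0})
print(round(score, 3), is_unsafe(score))
```

Keeping the score continuous, rather than only reading the generated word, is what lets an operator move the threshold to trade false positives against false negatives.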

Customizability and Adaptability: One of Llama Guard’s standout features is that it is instruction-tuned, with the safety categories supplied as part of its input. As a result, it can be adapted to different safety taxonomies by changing the prompt, or further fine-tuned for particular industries and applications. This flexibility is crucial for enterprises deploying AI solutions across sectors with varying safety requirements.
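Because the taxonomy travels in the prompt, swapping categories changes what the model checks for. The sketch below assembles a prompt loosely modeled on the format shown for the original Llama Guard release; the exact wording is approximate, and the `Category` type, the `build_guard_prompt` helper, and the retail categories are hypothetical. In practice, the model’s own chat template should be treated as the reference format.

```python
from dataclasses import dataclass


@dataclass
class Category:
    code: str      # identifier the model reports back, e.g. "O1"
    name: str      # short category title
    guidance: str  # what the policy prohibits for this category


def build_guard_prompt(categories, conversation, role="User"):
    """Assemble a Llama Guard-style classification prompt (illustrative wording).

    `conversation` is a list of (speaker, text) pairs with speaker "User" or
    "Agent"; `role` selects whose messages are assessed.
    """
    policy = "\n".join(f"{c.code}: {c.name}.\n{c.guidance}" for c in categories)
    turns = "\n\n".join(f"{speaker}: {text}" for speaker, text in conversation)
    return (
        f"[INST] Task: Check if there is unsafe content in '{role}' messages in "
        "conversations according to our safety policy with the below categories.\n\n"
        f"<BEGIN UNSAFE CONTENT CATEGORIES>\n{policy}\n<END UNSAFE CONTENT CATEGORIES>\n\n"
        f"<BEGIN CONVERSATION>\n\n{turns}\n\n<END CONVERSATION>\n\n"
        f"Provide your safety assessment for {role} in the above conversation:\n"
        "- First line must read 'safe' or 'unsafe'.\n"
        "- If unsafe, a second line must include a comma-separated list of "
        "violated categories. [/INST]"
    )


# A hypothetical taxonomy for a retail support bot, replacing the default one.
retail_policy = [
    Category("O1", "Personal Data", "Should not reveal or request payment card numbers."),
    Category("O2", "Harassment", "Should not insult or threaten customers or staff."),
]
prompt = build_guard_prompt(retail_policy, [("User", "What's the card number on file for me?")])
print(prompt)
```

Setting `role="Agent"` and including the assistant’s turn switches the same prompt from input moderation to output moderation.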

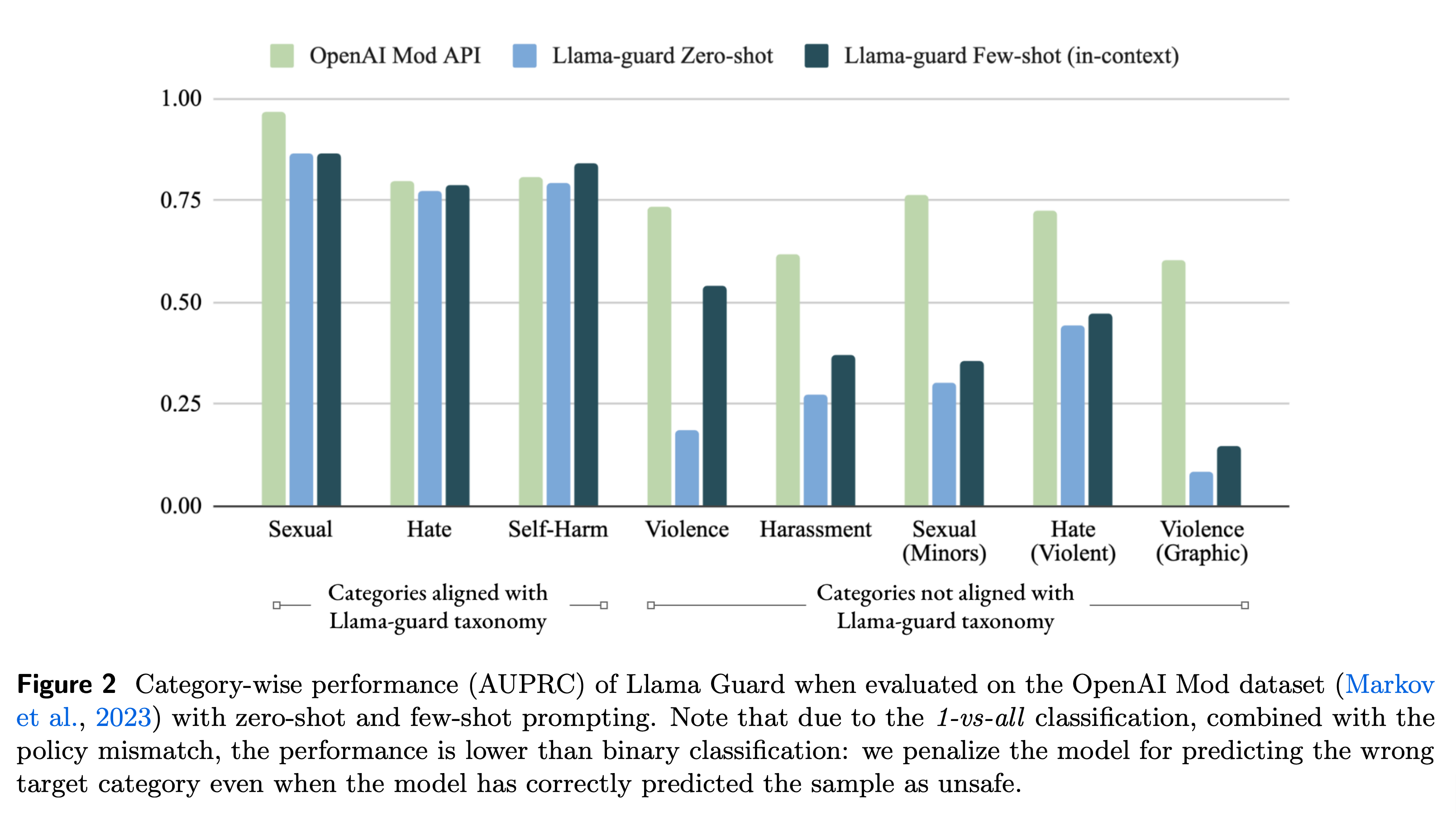

Enhanced Performance: Llama Guard has been evaluated on existing benchmarks such as the OpenAI Moderation Evaluation dataset and ToxicChat, where it matched or exceeded the performance of currently available content moderation tools. These results give enterprises good reason to rely on Llama Guard to help maintain the integrity of their AI applications.

How we implement Llama Guard at OneGen AI

At OneGen AI, Llama Guard is integral to our development process. Here’s how we incorporate it into our GenAI solutions:

Risk Classification: We use Llama Guard to categorize potential risks in both prompts and responses according to a safety risk taxonomy that covers categories such as violence, hate speech, sexual content, and more. Classifying these risks accurately lets us stop harmful or inappropriate content before it is delivered to users.
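Llama Guard reports its verdict as text: a first line reading `safe` or `unsafe` and, when unsafe, a second line listing the violated category codes. A small parser such as the hypothetical `parse_verdict` below turns that text into structured data. The code-to-name mapping mirrors the six categories of the original Llama Guard taxonomy; a customized taxonomy would substitute its own mapping.

```python
from typing import NamedTuple

# Category codes from the original Llama Guard taxonomy.
DEFAULT_TAXONOMY = {
    "O1": "Violence and Hate",
    "O2": "Sexual Content",
    "O3": "Criminal Planning",
    "O4": "Guns and Illegal Weapons",
    "O5": "Regulated or Controlled Substances",
    "O6": "Self-Harm",
}


class Verdict(NamedTuple):
    safe: bool
    categories: list  # human-readable names of violated categories


def parse_verdict(raw: str, taxonomy=DEFAULT_TAXONOMY) -> Verdict:
    """Parse 'safe', or 'unsafe' followed by a line of category codes."""
    lines = [line.strip() for line in raw.strip().splitlines() if line.strip()]
    if not lines or lines[0] not in ("safe", "unsafe"):
        raise ValueError(f"unexpected classifier output: {raw!r}")
    if lines[0] == "safe":
        return Verdict(True, [])
    codes = lines[1].split(",") if len(lines) > 1 else []
    # Unknown codes are kept verbatim rather than silently dropped.
    names = [taxonomy.get(code.strip(), code.strip()) for code in codes if code.strip()]
    return Verdict(False, names)


print(parse_verdict("unsafe\nO1,O3"))
```

Rejecting anything other than `safe` or `unsafe` on the first line makes malformed model output an explicit error instead of being mistaken for a pass.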

Zero-Shot and Few-Shot Prompting: Llama Guard can be adapted to new policies or guidelines through zero-shot prompting (describing the new categories in the prompt) or few-shot prompting (adding a handful of labeled examples), with little or no retraining. This is particularly valuable for enterprises that must comply with evolving regulatory requirements or industry standards.
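The difference between the two modes is only what goes into the prompt. The hypothetical helpers below leave a policy description untouched for zero-shot use and, for few-shot use, append a block of labeled examples. The block markers and layout are our own invention for illustration, not an official Llama Guard format.

```python
def format_few_shot(examples):
    """Render (message, label) pairs as an in-context example block.

    `label` is the verdict text the model should imitate, e.g. "safe" or
    "unsafe\\nO1". The block markers are hypothetical, not an official format.
    """
    parts = ["<BEGIN EXAMPLES>"]
    for i, (message, label) in enumerate(examples, start=1):
        parts.append(f"Example {i}\nUser: {message}\nAssessment: {label}")
    parts.append("<END EXAMPLES>")
    return "\n\n".join(parts)


def with_examples(policy_text, examples):
    """Zero-shot: return the policy unchanged. Few-shot: append labeled examples."""
    if not examples:
        return policy_text
    return policy_text + "\n\n" + format_few_shot(examples)


# Hypothetical new policy category, adapted without retraining.
policy = "O1: Medical Misinformation.\nShould not present unproven treatments as cures."
print(with_examples(policy, [
    ("Does garlic cure diabetes?", "unsafe\nO1"),
    ("What are common symptoms of diabetes?", "safe"),
]))
```

Including both a safe and an unsafe example helps show the model where the policy boundary lies, not just what a violation looks like.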

Real-Time Moderation: Llama Guard runs inline, analyzing each conversation turn as it happens so that unsafe content is flagged or mitigated before it reaches the end user. This proactive approach to content moderation is essential for maintaining trust in AI-driven applications.
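A minimal sketch of such an inline check, assuming two hypothetical callables: `generate` for the application’s main LLM and `classify` for a Llama Guard call that returns the raw verdict text. The prompt is checked before generation, and the reply is checked together with the prompt before it is returned.

```python
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (speaker, text), speaker is "User" or "Agent"
REFUSAL = "Sorry, I can't help with that request."


def guarded_reply(
    user_message: str,
    generate: Callable[[str], str],
    classify: Callable[[List[Turn]], str],
    refusal: str = REFUSAL,
) -> str:
    """Moderate one conversation turn on both the input and the output side.

    `generate` and `classify` are hypothetical stand-ins for the application's
    LLM call and a Llama Guard call returning raw verdict text.
    """
    conversation: List[Turn] = [("User", user_message)]
    # Input check: an unsafe prompt never reaches the main model.
    if classify(conversation).strip().startswith("unsafe"):
        return refusal
    reply = generate(user_message)
    # Output check: judge the reply in the context of the prompt that produced it.
    if classify(conversation + [("Agent", reply)]).strip().startswith("unsafe"):
        return refusal
    return reply


# Stub classifier for demonstration: flags any turn mentioning "steal".
def fake_classify(turns: List[Turn]) -> str:
    return "unsafe\nO3" if "steal" in turns[-1][1] else "safe"


print(guarded_reply("How do I reset my password?",
                    lambda msg: "Use the account settings page.",
                    fake_classify))
```

The main cost of this design is latency: each turn adds up to two classifier calls, so the safeguard model’s inference time has to fit within the application’s response budget.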

The Future of Safe GenAI with Llama Guard

As GenAI continues to advance, the importance of robust safety mechanisms cannot be overstated. At OneGen AI, we believe that technologies like Llama Guard are not just optional add-ons but essential components of any responsible AI strategy. By integrating Llama Guard into our GenAI solutions, we ensure that our clients can leverage the power of AI while maintaining the highest standards of safety and ethics.

Real-Life applications and comparative performance of Llama Guard

To truly grasp the impact of Llama Guard, it’s essential to look at how it performs in real-world scenarios and how it compares to other content moderation tools. Llama Guard has been evaluated on public moderation benchmarks, and its effectiveness across them makes it a strong fit for deployments where safety and security are paramount.

Real-Life Example: Protecting a financial services chatbot

Consider a financial services company that deployed a customer service chatbot powered by GenAI. The chatbot was designed to handle customer inquiries, process transactions, and offer financial advice. However, the company faced a significant challenge: ensuring that the chatbot did not inadvertently generate content that could lead to regulatory breaches or legal liabilities. This is where Llama Guard played a crucial role.

By integrating Llama Guard into the chatbot’s workflow, the company was able to classify and mitigate potential risks in real time. For instance, when a user asked about investment strategies, Llama Guard checked both the prompt and the response for potential violations, such as unauthorized financial advice or suggestions that could be construed as insider trading. This proactive moderation kept the chatbot from producing content with potentially severe consequences for the company and supported compliance with financial regulations.
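The original Llama Guard taxonomy has six categories (violence and hate, sexual content, criminal planning, guns and illegal weapons, regulated or controlled substances, self-harm), none of which is specific to financial advice, so checks like these would be written into the prompt as custom categories. Deciding what to do once a category is flagged is then up to the application. The sketch below shows one hypothetical approach: each custom category code maps to an action, and the strictest action wins. The codes `F1` to `F3`, their meanings, and the actions are all illustrative assumptions.

```python
from enum import IntEnum


class Action(IntEnum):
    # Higher values are stricter, so max() picks the strictest action.
    ALLOW = 0
    ADD_DISCLAIMER = 1
    ESCALATE_TO_HUMAN = 2
    BLOCK = 3


# Hypothetical custom categories for a financial-services assistant.
FINANCE_ACTIONS = {
    "F1": Action.ADD_DISCLAIMER,     # market commentary that needs a disclaimer
    "F2": Action.BLOCK,              # personalized buy/sell recommendations
    "F3": Action.ESCALATE_TO_HUMAN,  # possible insider-trading content
}


def decide(violated_codes, policy=FINANCE_ACTIONS, default=Action.BLOCK):
    """Return the strictest action across all violated categories.

    Codes missing from the policy fall back to `default`, so an unexpected
    category fails closed rather than open.
    """
    if not violated_codes:
        return Action.ALLOW
    return max(policy.get(code, default) for code in violated_codes)


print(decide(["F1", "F3"]).name)
```

Failing closed on unknown codes is a deliberate choice for a regulated setting: a taxonomy change that the action table has not caught up with results in blocked responses rather than unreviewed ones.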

Comparative Performance: Llama Guard vs. Traditional Moderation Tools

In terms of performance, Llama Guard compares favorably with several well-known moderation tools, including the OpenAI Moderation API and the Perspective API. According to the results presented in the Llama Guard research paper, it outperforms these tools in the following areas:

Prompt and Response Classification: Llama Guard achieved a higher Area Under the Precision-Recall Curve (AUPRC) than the OpenAI Moderation API and the Perspective API for both prompt and response classification. AUPRC summarizes the trade-off between precision (the share of flagged content that is actually unsafe) and recall (the share of unsafe content that gets flagged) across all decision thresholds, so a higher value means the model identifies unsafe content with fewer false positives and false negatives overall.
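A common way to estimate AUPRC is average precision: AP = sum over thresholds of (R_n - R_(n-1)) * P_n, where P_n and R_n are the precision and recall when everything scored at or above the n-th threshold is flagged. The plain-Python sketch below is a generic illustration of the metric on toy data, not the evaluation code used in the Llama Guard paper.

```python
def average_precision(scores, labels):
    """Average precision: AP = sum_n (R_n - R_{n-1}) * P_n over score thresholds.

    `scores` are classifier outputs (higher = more likely unsafe) and `labels`
    are 1 for unsafe, 0 for safe. Tied scores are treated as one threshold.
    """
    total_pos = sum(labels)
    if total_pos == 0:
        raise ValueError("AP is undefined without positive examples")
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    tp = fp = 0
    prev_recall = ap = 0.0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Admit every example scored at this threshold before measuring.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1]:
                tp += 1
            else:
                fp += 1
            i += 1
        recall = tp / total_pos
        precision = tp / (tp + fp)
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


# Toy data: two unsafe and two safe items.
print(average_precision([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0]))
```

Because AP depends only on the ranking of scores, it compares classifiers without committing to a single decision threshold, which is why it suits tools whose scores are calibrated differently.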

Adaptability to Diverse Taxonomies: Llama Guard’s ability to adapt to new safety taxonomies through zero-shot and few-shot prompting sets it apart from traditional tools, which require extensive retraining for new categories. For example, on the ToxicChat dataset, which is built from real user prompts to an open chatbot demo and labeled under its own toxicity guidelines, Llama Guard performed better than the other tools, highlighting its robustness on taxonomies it was not trained on.

At OneGen AI, we have leveraged these capabilities of Llama Guard to tailor our GenAI solutions to the specific needs of our clients. For instance, in the healthcare sector, where patient confidentiality and data protection are paramount, we use Llama Guard to help ensure that AI-generated content adheres to strict privacy regulations. By customizing the model’s taxonomy to align with healthcare-specific guidelines, we provide our clients with AI systems that not only enhance patient care but also safeguard sensitive information.

Conclusion

Llama Guard represents a significant advance in GenAI safety. By addressing the limitations of traditional content moderation tools and offering strong adaptability and benchmark performance, it enables enterprises to deploy AI-driven applications with greater confidence. At OneGen AI, we are proud to integrate this technology into our suite of GenAI solutions, helping our clients harness the full potential of AI while maintaining the highest standards of safety and compliance.

Through real-life applications such as the financial services chatbot and healthcare data protection, we have shown that Llama Guard is not just a theoretical tool but a practical solution that delivers tangible benefits. Whether it’s safeguarding a financial chatbot’s responses, protecting patient data, or ensuring the ethical deployment of AI, OneGen AI uses Llama Guard to create AI solutions that are both innovative and secure.