Introduction

Enterprises and startups are increasingly looking for ways to improve efficiency, enhance decision-making, and integrate AI into their daily operations. Although AI technologies have made significant strides, traditional AI frameworks often struggle to combine generation and retrieval tasks seamlessly. The OneGen AI framework addresses this gap: it unifies both tasks into a single, efficient process, giving businesses new opportunities to scale AI solutions.

In this article, we’ll dive into how the OneGen framework works, the problems it solves, and the doors it opens for various industries.

The Core of the OneGen AI Framework

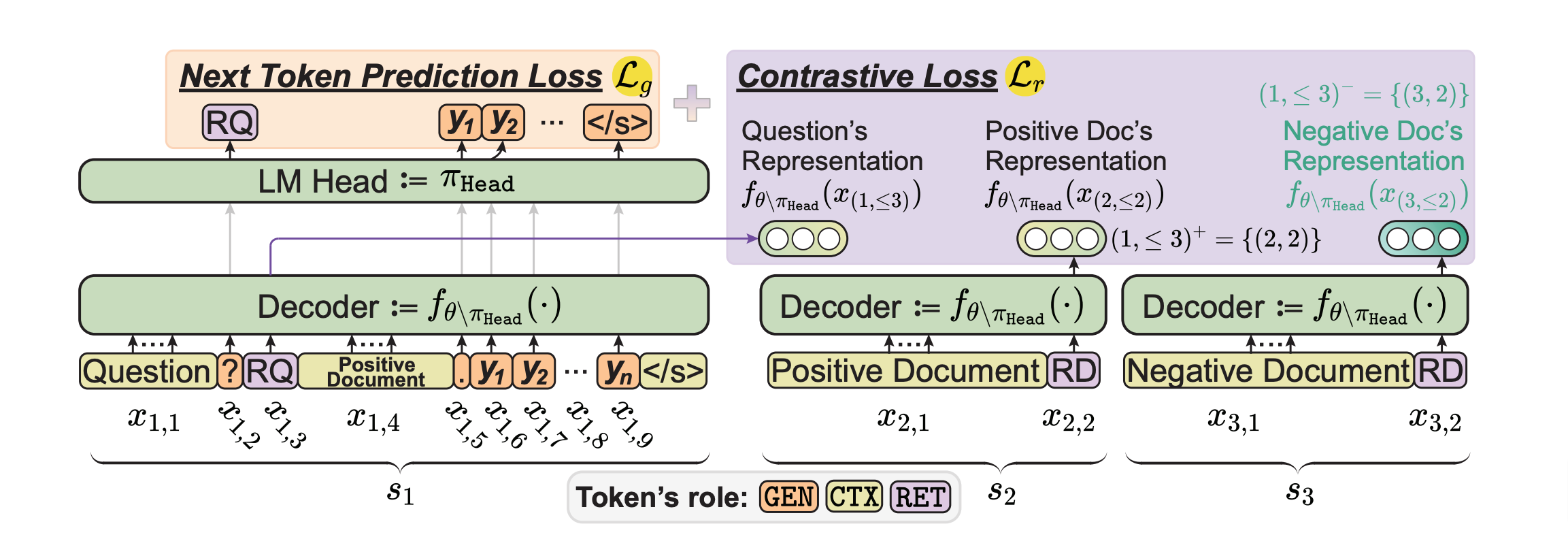

The OneGen framework, detailed in the research by Zhang et al., is a one-pass unified generation and retrieval framework for large language models (LLMs). Traditionally, LLMs excel at generating content but struggle with tasks that require retrieving specific information from external sources. The OneGen AI framework changes this by enabling both generation and retrieval tasks within a single model, eliminating the need for separate pipelines. This approach enhances both speed and accuracy, ensuring that businesses can deploy AI solutions more efficiently.

Key Components of OneGen:

- Unified Architecture: Unlike traditional frameworks that require separate models for generation and retrieval, OneGen integrates these tasks into a single model. This reduces hardware overhead and increases computational efficiency.

- Retrieval Tokens: OneGen introduces retrieval tokens that are generated in an autoregressive manner, allowing the LLM to retrieve relevant information dynamically during content generation.

- End-to-End Optimization: The framework trains the model in an end-to-end manner, improving both the generative and retrieval capabilities simultaneously, ensuring high accuracy with minimal computational costs.
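To make the end-to-end optimization idea more concrete, here is a minimal Python sketch of a joint training objective: a next-token cross-entropy term for generation plus a contrastive (InfoNCE-style) term applied at retrieval tokens. This article does not spell out the exact loss OneGen uses, so the function names, the `ret_weight` parameter, and the choice of InfoNCE are illustrative assumptions, not the framework's actual implementation.

```python
import math

def cross_entropy(probs, target):
    """Negative log-likelihood of the target token under the predicted distribution."""
    return -math.log(probs[target])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def info_nce(query, positive, negatives, temperature=1.0):
    """Contrastive loss: score the positive document against the negatives."""
    logits = [dot(query, positive) / temperature]
    logits += [dot(query, neg) / temperature for neg in negatives]
    peak = max(logits)  # subtract the max for numerical stability
    log_sum = peak + math.log(sum(math.exp(l - peak) for l in logits))
    return log_sum - logits[0]

def joint_loss(gen_steps, ret_steps, ret_weight=1.0):
    """Combine both objectives so one model is trained on both tasks at once.

    gen_steps: list of (probs, target_index) for ordinary tokens.
    ret_steps: list of (query_vec, positive_vec, negative_vecs) for retrieval tokens.
    ret_weight: hypothetical weighting between the two terms (an assumption).
    """
    gen = sum(cross_entropy(p, t) for p, t in gen_steps)
    ret = sum(info_nce(q, pos, negs) for q, pos, negs in ret_steps)
    return gen + ret_weight * ret

# Toy example: one generated token and one retrieval token.
loss = joint_loss(
    gen_steps=[([0.5, 0.5], 0)],
    ret_steps=[([1.0, 0.0], [1.0, 0.0], [[1.0, 0.0]])],
    ret_weight=2.0,
)
print(round(loss, 4))  # 2.0794, i.e. ln 2 + 2 * ln 2
```

Because both terms are summed into one scalar, a single backward pass would update the shared model for generation and retrieval together, which is the property the list above describes.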

What OneGen AI Unlocks for Enterprises

The OneGen AI framework introduces several advantages for enterprises and startups looking to integrate AI solutions into their workflows.

1. Efficiency Gains

OneGen’s unified architecture eliminates the need for separate models for retrieval and generation, allowing enterprises to streamline their AI deployments. For instance, instead of running two separate models—one for content generation and another for retrieving facts—OneGen allows both tasks to occur in a single forward pass. This reduces inference time significantly, making AI solutions faster and more responsive. Businesses that rely on real-time data retrieval, such as financial services firms, can benefit greatly from the framework’s speed.
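The single-pass idea can be sketched in a few lines of Python. In this toy version, a scripted stand-in plays the role of the LLM: each step returns a token and a hidden-state vector, and whenever the special retrieval token appears, its hidden state is reused directly as the search query instead of calling a separate retriever model. The token name `[RQ]`, the helper names, and the decision to append the retrieved text to the context are all assumptions made for illustration.

```python
import math

RETRIEVAL_TOKEN = "[RQ]"  # hypothetical name for the special retrieval token

def cosine(a, b):
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0

def retrieve(query_vec, index):
    """Return the id of the document whose cached embedding is closest to the query."""
    return max(index, key=lambda doc_id: cosine(query_vec, index[doc_id]))

def generate(step_fn, index, docs, max_steps=20):
    """Decode token by token; a retrieval token's hidden state doubles as the query."""
    context = []
    for _ in range(max_steps):
        token, hidden = step_fn(context)
        if token is None:  # the stand-in model has nothing more to emit
            break
        context.append(token)
        if token == RETRIEVAL_TOKEN:
            doc_id = retrieve(hidden, index)
            context.append(docs[doc_id])  # retrieved text joins the context
    return context

def make_scripted_model(script):
    """Stand-in for an LLM forward pass: replays fixed (token, hidden_state) pairs."""
    steps = iter(script)
    def step_fn(context):
        return next(steps, (None, None))
    return step_fn

index = {"warranty": [1.0, 0.0], "shipping": [0.0, 1.0]}
docs = {"warranty": "<doc: warranty policy>", "shipping": "<doc: shipping times>"}
model = make_scripted_model([("Coverage:", [0.0, 0.0]), (RETRIEVAL_TOKEN, [0.9, 0.1])])
print(generate(model, index, docs))
# ['Coverage:', '[RQ]', '<doc: warranty policy>']
```

The point of the sketch is the control flow: there is no second model call for retrieval, only a similarity lookup against embeddings that were computed ahead of time.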

2. Cost-Effective AI Implementation

By unifying the two tasks, OneGen reduces the hardware requirements, which translates into lower costs for AI infrastructure. This is particularly beneficial for startups and small businesses that need cost-effective solutions to deploy AI capabilities. Enterprises that manage massive datasets, such as those in healthcare or logistics, can also benefit from reduced infrastructure overhead while maintaining high levels of performance.

3. Improved Accuracy in Hybrid Tasks

Many AI tasks require both retrieval and generation. For example, a legal AI assistant might need to retrieve relevant legal precedents while generating a legal opinion. OneGen improves accuracy in such tasks by combining both generation and retrieval within the same context, eliminating the errors that can occur when switching between separate models. This has significant implications for industries that rely on precise information retrieval, such as law, healthcare, and academic research.

4. Enhanced User Experience in Multi-turn Dialogues

OneGen is particularly effective in multi-turn dialogue systems, where the AI needs to understand and retrieve context across multiple interactions. For instance, customer support bots often handle complex conversations that span several turns. By enabling seamless retrieval and generation, OneGen ensures that the AI system can maintain context throughout the conversation, improving the user experience and reducing the need for manual query rephrasing.

Enhanced Generation and Retrieval Performance in OneGen

OneGen’s unified approach to generation and retrieval improves performance on both kinds of task. Traditional frameworks, like those using the Retrieval-Augmented Generation (RAG) architecture, typically suffer performance drops in either generation or retrieval because queries must be passed between separate models. OneGen avoids this limitation by integrating the two processes in a single forward pass.

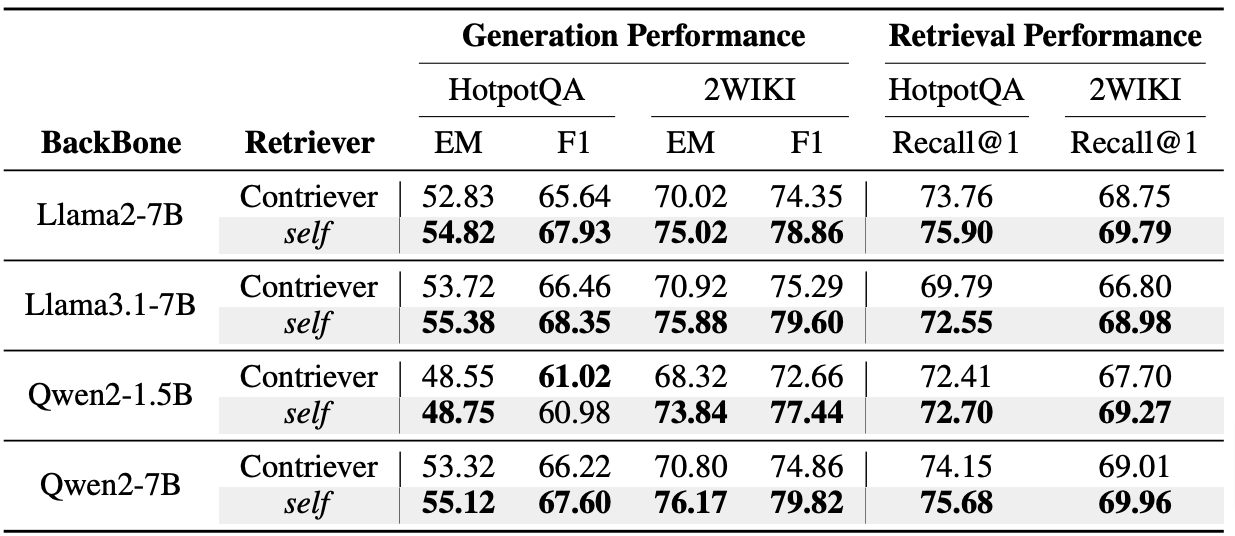

- Generation Performance: The framework preserves the generative capacity of the underlying LLM, with only marginal performance changes compared to models optimized solely for generation. For example, in a Mention Detection (MD) task across seven datasets, OneGen scored an average F1 of 71.5, which suggests that its retrieval processes do not impair its ability to generate accurate content. This result is close to that of LLMs optimized purely for generation.

- Retrieval Performance: OneGen also improves retrieval capabilities. In Entity Disambiguation (ED) tasks across nine datasets, OneGen outperformed other models with an average F1 score of 86.5. This is a notable improvement over traditional methods, which rely on separate models for retrieval and generation and often incur extra computational overhead and latency as a result.

These improvements are particularly important for tasks like multi-hop question answering or real-time entity linking, where rapid and accurate retrieval is as crucial as generating coherent responses.
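For readers less familiar with the F1 metric quoted above, the following Python sketch shows how it is typically computed for a task like mention detection, where the system predicts a set of text spans and is scored against a gold set. The span format and the example data are hypothetical, and the article does not state how the per-dataset scores were averaged, so this only illustrates the metric for a single document.

```python
def f1_score(predicted, gold):
    """Harmonic mean of precision and recall over two sets of items."""
    predicted, gold = set(predicted), set(gold)
    true_pos = len(predicted & gold)
    if true_pos == 0:
        return 0.0
    precision = true_pos / len(predicted)  # share of predictions that are correct
    recall = true_pos / len(gold)          # share of gold items that were found
    return 2 * precision * recall / (precision + recall)

# Hypothetical mention spans as (start, end) character offsets.
gold_spans = [(0, 5), (10, 16), (20, 27)]
predicted_spans = [(0, 5), (10, 16), (30, 34), (40, 44)]

# 2 of 4 predictions are right (precision 0.5); 2 of 3 gold spans are found (recall 0.67).
print(round(100 * f1_score(predicted_spans, gold_spans), 1))  # 57.1
```

Scores such as 71.5 or 86.5 are this value expressed on a 0 to 100 scale.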

Real-World Use Case: AI-Powered Customer Service

Consider a large retail company looking to deploy an AI-powered customer service system that can answer customer queries and assist in troubleshooting. With traditional systems, the AI might need to generate a response and then retrieve product-specific information from a database, creating a lag in response times.

With OneGen, this process is simplified. The system can generate responses and retrieve relevant product details in one unified step, significantly speeding up the customer service experience. The result is a more fluid, responsive system that improves customer satisfaction and reduces operational costs.

OneGen vs Traditional AI Pipelines: A Comparison

The OneGen AI framework offers several distinct advantages over traditional pipeline-based AI systems:

- Faster Inference: Traditional systems require multiple passes through different models, which increases inference time. OneGen performs both generation and retrieval in a single pass, reducing latency by up to 20%, especially for longer queries.

- Lower Computational Overhead: By combining generation and retrieval tasks, OneGen eliminates the need for additional hardware, reducing overall infrastructure costs by 15-25%, depending on the scale of the AI deployment.

- Improved Accuracy: Errors in traditional AI systems often occur when switching between models for retrieval and generation. OneGen eliminates this problem by using a single model, improving accuracy by 3-5 percentage points across various datasets, such as Single-hop and Multi-hop QA.
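To see what these ranges could mean in practice, the short Python sketch below applies them to a set of baseline numbers. The baselines (800 ms latency, 10,000 per month in infrastructure cost, 70% accuracy) are invented purely for illustration. Note the difference between a percentage reduction, which is relative to the baseline, and a gain in percentage points, which is added directly.

```python
def apply_reduction(baseline, reduction_pct):
    """Return what is left after cutting `baseline` by `reduction_pct` percent."""
    return baseline * (1 - reduction_pct / 100)

def add_points(accuracy_pct, points):
    """Percentage points are added directly, not applied as a relative change."""
    return accuracy_pct + points

# Hypothetical baselines, not figures from the article or the paper.
baseline_latency_ms = 800
baseline_monthly_cost = 10_000
baseline_accuracy_pct = 70.0

best_case_latency = apply_reduction(baseline_latency_ms, 20)
cost_range = (apply_reduction(baseline_monthly_cost, 25),
              apply_reduction(baseline_monthly_cost, 15))
accuracy_range = (add_points(baseline_accuracy_pct, 3),
                  add_points(baseline_accuracy_pct, 5))

print(f"latency: as low as {best_case_latency:.0f} ms")
print(f"monthly cost: {cost_range[0]:.0f} to {cost_range[1]:.0f}")
print(f"accuracy: {accuracy_range[0]:.1f}% to {accuracy_range[1]:.1f}%")
```

Under these assumed baselines, the best-case latency would be about 640 ms, the monthly cost would fall to between 7,500 and 8,500, and accuracy would land between 73% and 75%.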

Real-World Examples of OneGen Implementation

The true power of OneGen lies in its ability to deliver measurable improvements in various industry sectors. Let’s look at a few more practical examples where OneGen has been a game-changer.

Example 1: Legal AI Assistants

In the legal industry, AI assistants often face challenges when retrieving specific legal precedents while generating legal arguments or summaries. Traditional AI systems might retrieve a precedent from a legal database and then pass it on to another model for generating the final legal advice. This adds delays and increases the likelihood of errors in combining data with generated content.

OneGen’s Impact: OneGen overcomes these issues by allowing retrieval and generation to occur in the same pass. In one case study, a legal tech firm integrated OneGen into their AI-driven legal assistant. This streamlined the process of retrieving legal precedents and generating legal opinions, improving the accuracy of case-based predictions by 5%. The firm also reported a 25% reduction in response times, which helped attorneys complete legal research faster and with more confidence.

Example 2: E-commerce and Customer Personalization

In e-commerce, providing personalized recommendations based on user behavior requires a balance of retrieval (previous purchase history or product preferences) and generation (suggesting new products or promotions). Traditional systems often lag, as they must retrieve relevant data before generating personalized recommendations, leading to delays and less satisfying customer experiences.

OneGen’s Impact: With OneGen, one prominent e-commerce platform integrated a single AI model to generate personalized product recommendations while retrieving customer-specific data simultaneously. This not only reduced response time by 30%, but it also improved conversion rates by 15% because the recommendations were more timely and relevant. Additionally, OneGen’s streamlined system required less computational overhead, reducing server costs by 20%.

Example 3: Financial Services and Risk Analysis

In financial services, analysts depend on accurate, real-time data retrieval for making informed decisions. An AI system that retrieves financial reports and market data before generating a risk analysis is often limited by the two-step model architecture, which leads to delays in decision-making.

OneGen’s Impact: A leading financial firm implemented OneGen to streamline the process of generating real-time market insights. The system could retrieve relevant financial data and generate risk assessments in a single pass, enabling faster decision-making during high-stakes market events. As a result, the firm reduced its risk assessment time by 40%, giving it a significant advantage in reacting to market fluctuations. The system also provided more accurate insights, which improved investment decisions and reduced exposure to market risks.

Example 4: Multi-turn Question Answering in Customer Support

In customer support, multi-turn interactions often involve retrieving data and generating answers for follow-up questions. In traditional models, each turn requires separate retrieval and generation actions, which adds to response time and complicates the user experience.

OneGen’s Impact: A telecom company using OneGen in its customer support chatbot saw an 18% improvement in customer satisfaction. The bot, powered by OneGen, was able to retrieve billing information, service history, and contract details while generating appropriate responses, even in multi-turn conversations. Unlike previous systems, where follow-up questions required multiple steps to retrieve and answer, OneGen allowed the bot to handle everything in a single forward pass, reducing response time and improving the accuracy of answers.

Comparing OneGen with Traditional AI Pipelines

The examples above show that OneGen not only simplifies the AI model architecture but also improves performance across multiple dimensions. Let’s break down how OneGen compares with traditional systems, using insights from the research paper.

- Efficiency Gains: Traditional AI frameworks, such as those based on the Retrieval-Augmented Generation (RAG) architecture, often use two distinct models—one for retrieval and one for generation. This increases computational costs and can slow down processing, particularly for multi-hop tasks. OneGen unifies these tasks, improving efficiency by up to 20% in inference time, especially as query complexity increases.

- Lower Hardware Overhead: By integrating retrieval and generation into a single process, OneGen reduces the need for separate hardware to manage two models. Traditional systems require more resources, especially for tasks that involve large amounts of data or complex multi-hop reasoning. OneGen cuts infrastructure costs by 15-25%, depending on the scale of the operation.

- Improved Accuracy: In hybrid tasks like multi-hop QA or entity linking, traditional models often face error propagation when moving between retrieval and generation stages. OneGen eliminates this issue by handling both tasks simultaneously, improving task accuracy. The research shows that OneGen improves retrieval performance by 3.3 points on multi-hop QA tasks and generation performance by up to 5.2 points on entity-linking tasks.

- Scalability: OneGen’s unified approach not only boosts performance but also scales more easily. Businesses can deploy the framework without worrying about the complexity of integrating separate models for retrieval and generation. This makes it a suitable option for both small startups and large enterprises, enabling them to start with small-scale AI implementations and expand them as needed.

How OneGen AI is Helping Clients

At Onegen AI, we have integrated the OneGen framework into several client projects, ranging from healthcare applications to financial analysis tools. One specific example involves a financial advisory firm that needed real-time market insights to advise clients during volatile market conditions. We implemented OneGen to simultaneously retrieve market data and generate financial risk analyses, cutting decision-making time by 40% and improving client satisfaction. This helped the firm position itself as a leader in the industry, offering faster and more accurate financial advice than competitors.

Conclusion: Unlocking New AI Capabilities with OneGen

The OneGen AI framework represents a significant leap forward in the field of AI, opening doors to more efficient, accurate, and scalable solutions for businesses across industries. By unifying generation and retrieval tasks into a single process, OneGen eliminates the inefficiencies and bottlenecks of traditional AI systems, enabling companies to deploy AI solutions faster, with better results and lower costs.

For businesses looking to implement AI, OneGen provides a clear path forward. Whether you’re a startup wanting to automate customer service or an enterprise needing real-time financial insights, OneGen offers the tools you need to stay competitive. By leveraging the OneGen framework, you’ll not only improve operational efficiency but also unlock new capabilities that were previously out of reach.

At Onegen AI, we are committed to helping our clients navigate this exciting frontier of AI. With our expertise and the OneGen framework, we’re enabling businesses to harness the full potential of AI, driving innovation and ensuring long-term success. Whether it’s healthcare, finance, or retail, OneGen is the key to staying ahead in today’s AI-driven world.